Search Engine Optimisation (SEO) is a marriage of engineering and marketing. Many people think SEO is only about placing the right keywords to rank higher on search results pages.

There are many standard practices and guidelines for ensuring that the pages rank higher on search engines.

But what if, after all the work, web crawlers of search engines such as Google or Bing cannot even see your webpage? This is a real problem that could impact Single Page Apps or, more specifically, apps utilising the App Shell model.

In this post, we will go over how WealthDesk solved its SEO issues while keeping the App Shell architecture across our web properties.

The problem

WealthDesk not only hosts its own websites but also powers microsites for dozens of partners. To do this scalably, we follow an App Shell model that displays the right content in real time, based on the context.

But this gave rise to an unexpected problem: Google’s web crawlers were not even able to see any content on our websites.

The issue faced by web crawlers

To understand this problem, we have to understand how search engines work. Search engines build a picture of any website using specialised programs called web crawlers. These web crawlers load websites in an in-memory browser, pick up all the links on the page and store a snapshot of the page. After this, they move on to the next link in the queue.

This system has worked well for search engines such as Google for the past 25 years. However, because of their dynamic nature, web pages and applications built on modern JavaScript frameworks or the App Shell model may not be SEO friendly. The web crawlers of most search engines will not spend the computational resources needed to render these pages completely, so the search engine may end up thinking that the page just says “Loading…” like in the screenshot above.

Note that the same page would load perfectly well on your phone or computer.
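To make the crawler's point of view concrete, here is a minimal Python sketch that extracts the text a client would see if it read the raw HTML without executing any JavaScript. The `APP_SHELL_HTML` document and the `/bundle.js` path are hypothetical stand-ins, not our actual markup.

```python
from html.parser import HTMLParser


class VisibleTextExtractor(HTMLParser):
    """Collects the text available in raw HTML when no JavaScript is run."""

    # Text inside these tags is never shown to a reader, so it is ignored.
    SKIPPED_TAGS = {"script", "style"}

    def __init__(self):
        super().__init__()
        self._skip_depth = 0
        self.chunks = []

    def handle_starttag(self, tag, attrs):
        if tag in self.SKIPPED_TAGS:
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in self.SKIPPED_TAGS and self._skip_depth > 0:
            self._skip_depth -= 1

    def handle_data(self, data):
        text = data.strip()
        if text and self._skip_depth == 0:
            self.chunks.append(text)


def text_without_javascript(html):
    """Return the text content of `html` as seen without rendering."""
    parser = VisibleTextExtractor()
    parser.feed(html)
    parser.close()
    return " ".join(parser.chunks)


# Hypothetical App Shell page: the real content only appears after
# /bundle.js has run in a browser and filled the #root element.
APP_SHELL_HTML = """
<!doctype html>
<html>
  <head>
    <title>Example microsite</title>
    <script src="/bundle.js"></script>
  </head>
  <body><div id="root">Loading...</div></body>
</html>
"""

print(text_without_javascript(APP_SHELL_HTML))  # Example microsite Loading...
```

Everything the page is actually about lives behind the script, so a crawler that stops at this stage has nothing useful to index.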

In its developer documentation, Google clearly states, “Currently, it’s difficult to process JavaScript and not all search engine crawlers are able to process it successfully or immediately.”

To fix this problem, we considered three possible solutions.

The possible solutions

- Make our website static/templatised

Making the website static is a simple and effective solution. However, the static pages do not work with React MicroFrontEnds (MFEs) that help us scale and power our Embedded WealthDesk Gateway offering. Maintaining two different versions of the websites would also be very resource-intensive.

- Server-Side Rendering (SSR)

SSR is one of the most common methods used to display information on web pages. In SSR, the full HTML for the page is rendered on the server itself by doing some of the work that your browser does. This helps achieve fast First Paint and Time To Interactive for the users.

Though it may sound appealing, SSR has its share of drawbacks. One of the most critical is that SSR is resource-intensive and requires significant infrastructure changes and costs, which can strain both budgets and the workforce. Another drawback is that you cannot render third-party JavaScript on the server side.

Another issue with SSR is the performance penalty. Many server rendering solutions don’t flush early and can delay Time To First Byte or double the data being sent (e.g. inlined state used by JS on the client). In React, renderToString() can be slow as it’s synchronous and single-threaded.

- Dynamic pre-rendering

Google's developer documentation has suggested dynamic pre-rendering as a workaround that lets web crawlers process JavaScript-heavy pages.

Dynamic pre-rendering involves creating a version of the web pages specifically for the search engine bots (web crawlers) and another one for the users.

Dynamic pre-rendering can be implemented with almost no UI code changes and replicated across many web properties with few changes to the infrastructure. This saves considerable cost and engineering bandwidth. Several Pre-rendering as a Service products, like Prerender.io, make it easier to implement.

So let’s see what dynamic pre-rendering is and how it works for WealthDesk.

What is dynamic pre-rendering?

Google has stated that “Dynamic rendering means switching between client-side rendered and pre-rendered content for specific user agents.”

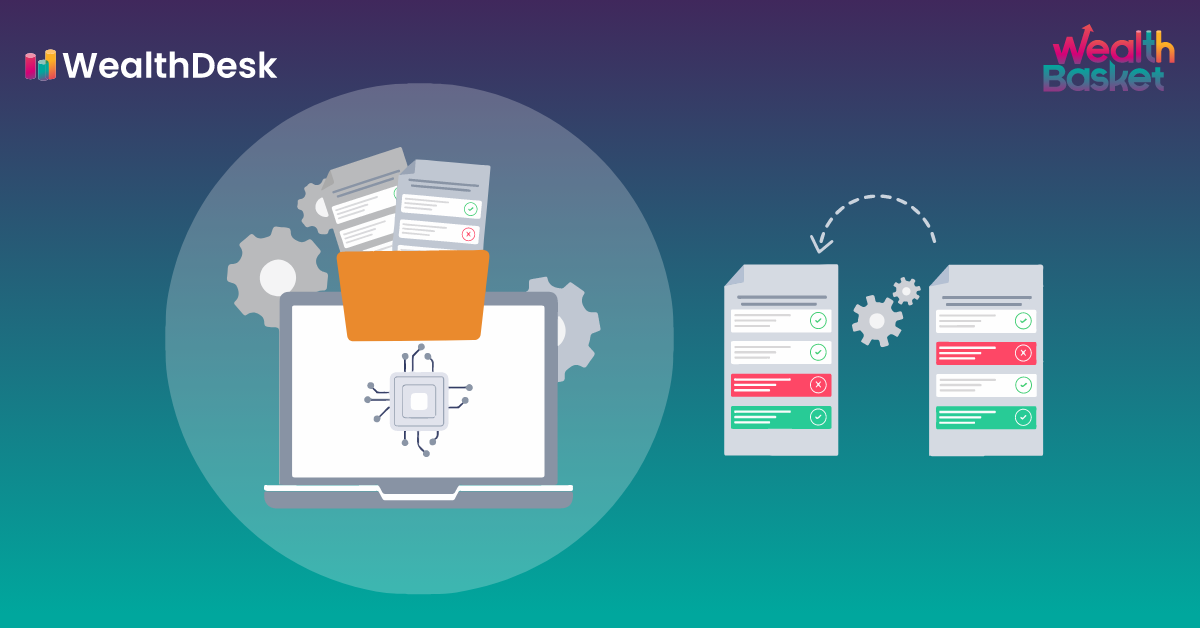

As discussed above, two versions of a webpage are created in dynamic pre-rendering. One of the web pages will be a pre-rendered version which is sent to the search engine bots. The other version is the normal client-side version which is sent directly to the users.
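The switch between the two versions comes down to inspecting the `User-Agent` header of each request. The sketch below shows one way this decision could look in Python. The crawler list, the static-asset extensions and the function name `should_prerender` are illustrative assumptions, not the exact rules we run in production.

```python
import re

# Illustrative, non-exhaustive list of crawler User-Agent substrings (assumption).
CRAWLER_PATTERN = re.compile(
    r"googlebot|bingbot|yandex|baiduspider|duckduckbot|"
    r"twitterbot|facebookexternalhit|linkedinbot|slackbot",
    re.IGNORECASE,
)

# Static assets never need pre-rendering, even when a crawler asks for them.
STATIC_ASSET_PATTERN = re.compile(
    r"\.(js|css|png|jpe?g|gif|svg|ico|woff2?|json|xml|txt)$",
    re.IGNORECASE,
)


def should_prerender(user_agent, path):
    """Return True when the request should get the pre-rendered version."""
    if not user_agent:
        return False
    if STATIC_ASSET_PATTERN.search(path):
        return False
    return bool(CRAWLER_PATTERN.search(user_agent))


googlebot = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"
chrome = "Mozilla/5.0 (Windows NT 10.0; Win64; x64) Chrome/120.0 Safari/537.36"

print(should_prerender(googlebot, "/funds/overview"))  # True
print(should_prerender(googlebot, "/static/main.js"))  # False
print(should_prerender(chrome, "/funds/overview"))     # False
```

Crawler names change over time, so a real deployment would usually rely on a maintained list rather than a hand-written pattern like this one.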

How does dynamic pre-rendering work?

For regular users, the initial HTML and JavaScript are sent directly from the server to the user's browser, which then renders the page on the client side.

For the crawlers, the initial HTML and JavaScript pages are converted to static HTML versions, which search engine bots can process reliably. The conversion can be done through a Pre-rendering as a Service product like Prerender.io or with tools such as Puppeteer and Rendertron. Once converted, these HTML versions allow the bots to fully access, crawl and index the content for search engines.

How have we implemented pre-rendering at WealthDesk?

Most of our web properties are served through AWS CloudFront, our Content Delivery Network (CDN), which uses AWS S3 buckets as the origin for our static resources (HTML/JS/CSS files).

To implement pre-rendering, we had to detect when a request was coming from Googlebot or any other automated bot and route that request to Prerender.io. On a traditional web server, we could do that in code. But how do we do that when CloudFront is acting as our server?

We use the Function associations feature of CloudFront. It lets you attach a function that runs at specific stages of a request's lifecycle (viewer request, origin request, origin response and viewer response), as per the following image:

We would have to associate one Lambda@Edge function with the viewer request stage to detect the user agent of the incoming request, and another Lambda@Edge function with the origin request stage to route it to Prerender.io instead of our S3 buckets.

But since every single request hitting CloudFront would trigger at least the viewer request stage, we were talking about millions and millions of Lambda executions. This would cost significant money and introduce latency.

The then recently announced AWS CloudFront Functions came to our rescue. They come with significant restrictions compared to Lambda@Edge functions but are 1/6th the cost and much faster. This made them the ideal choice for the viewer request association.
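The two stages described above can be sketched roughly as follows. This is an illustration of the logic, not our deployed code: it is written in Python against the Lambda@Edge event shape for both stages, whereas a CloudFront Function for the viewer request stage has to be written in JavaScript and receives a differently shaped event. The hostname `service.prerender.io`, the `X-Prerender-Host` and `X-Prerender-Token` marker headers, the token placeholder and the shortened crawler pattern are all assumptions made for this example.

```python
import re
from urllib.parse import quote

# Shortened, illustrative crawler pattern (assumption).
CRAWLER_PATTERN = re.compile(r"googlebot|bingbot|yandex|baiduspider", re.IGNORECASE)

PRERENDER_HOST = "service.prerender.io"   # assumed pre-rendering service hostname
PRERENDER_TOKEN = "YOUR_PRERENDER_TOKEN"  # placeholder, not a real credential


def _header(request, name):
    """Read the first value of a header from a CloudFront request, or ''."""
    values = request["headers"].get(name, [])
    return values[0]["value"] if values else ""


def viewer_request_handler(event, context=None):
    """Viewer request stage: mark crawler requests with marker headers."""
    request = event["Records"][0]["cf"]["request"]
    if CRAWLER_PATTERN.search(_header(request, "user-agent")):
        host = _header(request, "host")
        request["headers"]["x-prerender-host"] = [
            {"key": "X-Prerender-Host", "value": host}
        ]
        request["headers"]["x-prerender-token"] = [
            {"key": "X-Prerender-Token", "value": PRERENDER_TOKEN}
        ]
    return request


def origin_request_handler(event, context=None):
    """Origin request stage: send marked requests to the pre-rendering service."""
    request = event["Records"][0]["cf"]["request"]
    host = _header(request, "x-prerender-host")
    if host and _header(request, "x-prerender-token"):
        # Swap the S3 origin for a custom origin pointing at the service.
        request["origin"] = {
            "custom": {
                "domainName": PRERENDER_HOST,
                "port": 443,
                "protocol": "https",
                "path": "/" + quote("https://" + host, safe=""),
                "sslProtocols": ["TLSv1.2"],
                "readTimeout": 30,
                "keepaliveTimeout": 5,
                "customHeaders": {},
            }
        }
        request["headers"]["host"] = [{"key": "Host", "value": PRERENDER_HOST}]
    return request
```

For a setup like this to work, the marker headers need to be forwarded to the origin request stage and included in the cache key, so that crawlers and users do not receive each other's cached responses. A hardened version would also strip these headers from incoming requests that are not identified as crawlers, since clients can set them too.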

With all of this hooked up, we were able to get Googlebot to see the same page above somewhat like this:

Note: If you are wondering about the broken icon in the screenshot above: it is unavoidable due to resource quota limitations of the crawlers. But, since this page has all the content we intend to get indexed by Google, this is great progress!

Further effort

Further work that was required to complete this effort:

- We packaged the setup as CloudFormation StackSets, which can be replicated easily across properties, accounts and regions. This means that any improvements or bug fixes can be deployed quickly to all our partner microsites.

- On some pages, we had to make minor changes to the JavaScript code so that Prerender.io can detect when the page has finished loading. This may only be needed on extremely heavy pages.

Conclusion

Dynamic pre-rendering helped WealthDesk make sure that search engines index the correct content on our JavaScript-heavy, App Shell web pages. Using the process described above, we were able to solve this problem scalably across dozens of independent websites with near-zero effort from frontend developers.